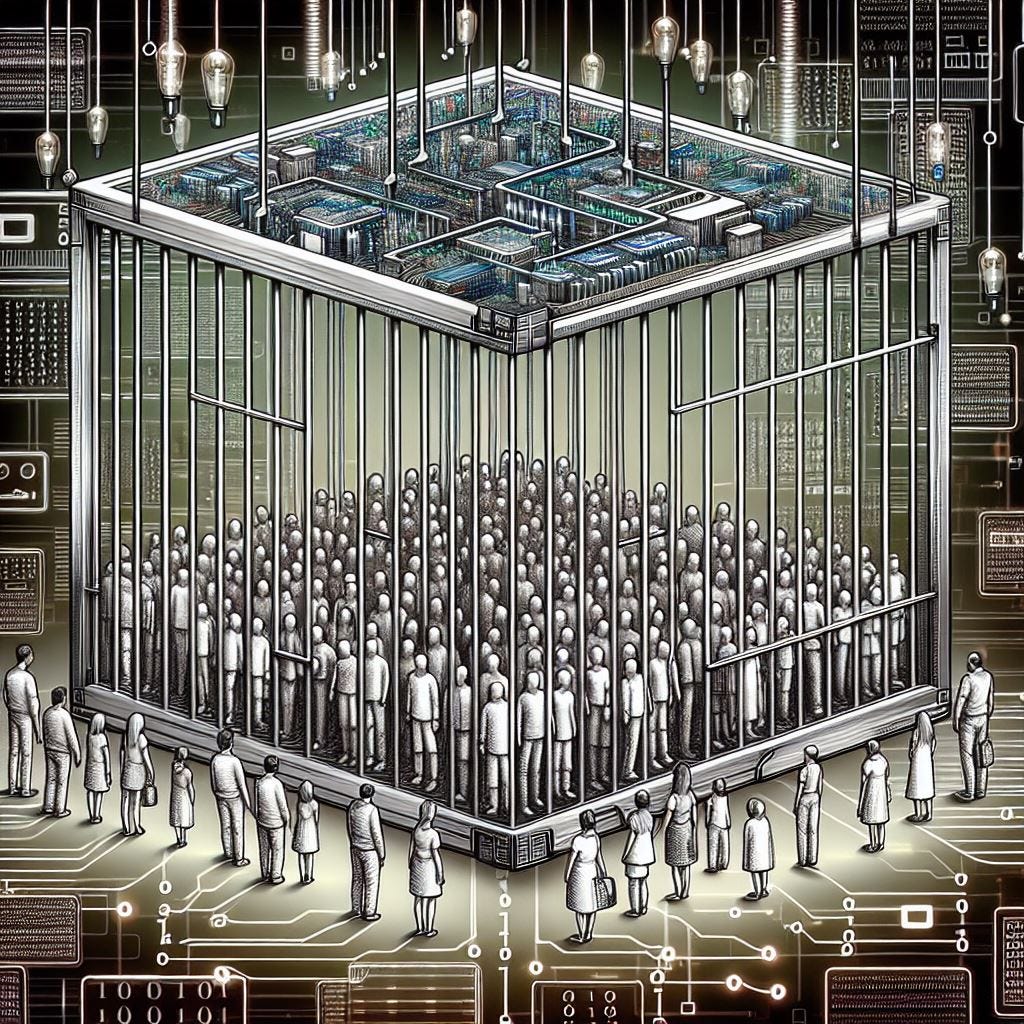

The Algorithmic Cage: Are We Building Our Own Digital Prison?

This reminds me of the show "Person of Interest".

My Personal Reflections

We use the internet to connect, to learn, to express ourselves. But what if, as we click and scroll, we're unknowingly building our own cage? What if the pervasive sense of being watched, of having our data mined and manipulated, isn't just a feeling, but a feature of a system designed for control? Voices like Yasha Levine, Edward Snowden, Glenn Greenwald, and Cory Doctorow warn that this "algorithmic cage" threatens our privacy, our agency, and the very foundations of authentic human connection. To break free, we must understand how this cage is constructed and actively resist its influence.

The Problem: The Erosion of Digital Autonomy Through Pervasive Surveillance

We face a crisis of digital autonomy that is more complex than many realize. Concerns about data collection are valid, but they only scratch the surface of a deeper issue: surveillance has become a persistent, built-in feature of our digital lives. This monitoring extends beyond our conscious online interactions, weaving a web of data collection that is difficult to escape.

Every digital action we take, from a simple search to scrolling through social media, adds to a growing profile of our behaviors and preferences. These profiles are used to predict, and potentially influence, our future actions. Particularly troubling is that even when we believe we are exercising our right to privacy by opting out of certain tracking methods, we often unknowingly shift the surveillance to less visible but equally invasive channels. Alternatively, we grow apathetic and trade our data for convenience.

Consider, for example, the illusion of choice offered by privacy settings and opt-out mechanisms. A 2020 study¹ found that many websites use dark patterns to nudge users into accepting tracking cookies, making genuine opt-out complex and time-consuming. More concerning still, a Google policy change that took effect in 2025 permits advertisers to use digital fingerprinting to track users across devices. Privacy advocates and regulators have criticized the move, because fingerprinting is harder for users to detect and block than traditional cookie-based tracking.
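Why is fingerprinting harder to escape than cookies? A minimal sketch helps, with the caveat that it illustrates the general idea rather than any real ad platform's method: a tracker hashes a handful of attributes that a browser reveals on every visit. The function name `fingerprint` and the attribute names and values are hypothetical examples. Nothing is stored on the user's device, so there is no cookie to clear; the same configuration simply produces the same identifier again.

```python
import hashlib
import json


def fingerprint(attributes: dict) -> str:
    """Derive a stable identifier from browser attributes (illustrative only)."""
    # Serialize with sorted keys so identical attributes always yield the same string
    canonical = json.dumps(attributes, sort_keys=True)
    # Hash that string into a compact, repeatable ID; nothing is saved on the device
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical attributes a site might read from a visitor's browser
visit_monday = {
    "user_agent": "Mozilla/5.0 (X11; Linux x86_64) Firefox/128.0",
    "screen": "2560x1440",
    "timezone": "Europe/Berlin",
    "languages": ["de-DE", "en-US"],
    "fonts": ["DejaVu Sans", "Noto Serif", "Ubuntu"],
}

# Clearing cookies changes none of these attributes, so a later visit matches
visit_friday = dict(visit_monday)

# A machine with a different configuration produces a different identifier
other_user = dict(visit_monday, timezone="America/Chicago")

if __name__ == "__main__":
    print(fingerprint(visit_monday) == fingerprint(visit_friday))  # True
    print(fingerprint(visit_monday) == fingerprint(other_user))    # False
```

Real fingerprinting scripts draw on many more signals (canvas rendering is a well-known one), but the principle is the same: because the identifier is recomputed from the browser itself on each visit, the familiar remedy of deleting cookies does not reset it.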

But the surveillance net extends far beyond our direct online activities. A 2024 academic study² examined citizen perspectives on data privacy in smart cities. It highlighted a paradox: citizens express mistrust of data practices yet remain willing to participate in smart city initiatives if their utility and integrity can be established.

The consequences of this persistent surveillance are subtle but far-reaching. They include the gradual reshaping of our decision-making, our willingness to explore new ideas, and our comfort in expressing dissenting opinions. We're not just being watched; we're being nudged, influenced, and potentially controlled in ways we may not recognize.

So, how did we arrive at this point where opting out doesn’t feel like an option? And how do we reclaim our digital autonomy in a world where surveillance has become the default state?

The Causes: An Architecture of Control and Exploitation

The roots of this algorithmic cage lie in a complex interplay of historical developments, economic incentives, and technological advancements. These forces have coalesced to create a pervasive system of digital surveillance that is difficult to resist.

As Yasha Levine details in "Surveillance Valley" (2018), the internet's origins are deeply intertwined with military and intelligence agencies focused on monitoring and control. These surveillance structures did not simply vanish with the rise of the commercial internet. Instead, they were repurposed and expanded, raising critical questions about who truly controls the internet and to what ends.

The profit-driven nature of tech companies has intensified digital surveillance. As noted in a 2023 Harvard Law Review article, major tech platforms have become "surveillance intermediaries," accumulating vast amounts of personal data through their omnipresence in our daily lives. Because these companies answer first to their shareholders, the pressure to generate profit often drives their data collection and algorithmic practices, potentially at the expense of user privacy and civil liberties.

The rise of AI and automated surveillance has dramatically amplified the power and reach of these systems. Machine learning algorithms can analyze vast amounts of data to identify patterns, predict behavior, and even flag dissent, at a speed and scale no human analyst could match. As Cathy O'Neil argues in "Weapons of Math Destruction" (2016), such systems can sort, score, and police populations in ways that are opaque and difficult to challenge.

This convergence of historical surveillance legacies, profit-driven platform economies, and AI-powered monitoring creates a formidable architecture of control and exploitation: an ecosystem in which our every digital interaction can be tracked, analyzed, and monetized. And it leaves open the question of whether we ever meaningfully consented.

The Consequences: The Algorithmic Cage in Action

The knowledge that our online activity is being monitored can produce a chilling effect on freedom of expression, leading to self-censorship and a reluctance to voice dissenting opinions. Mass surveillance was brought to global attention in 2013 by the revelations of NSA whistleblower Edward Snowden, reported by journalist Glenn Greenwald. A 2016 study by Jonathon Penney in the Berkeley Technology Law Journal, which examined Wikipedia traffic after those revelations, found evidence of such chilling effects, noting that surveillance can create a societal context that encourages self-censorship. When people know they are constantly watched, they may hesitate to engage in certain online activities or to voice controversial views, stifling the free exchange of ideas that a healthy democracy depends on.

Moreover, algorithmically amplified misinformation and propaganda can distort public discourse, making it increasingly difficult to tell truth from falsehood. This manipulation of information flows can shape public opinion in subtle yet powerful ways, undermining the foundations of informed civic engagement.

The erosion of authentic relationships is another concerning outcome of this pervasive surveillance culture. The belief that our interactions may be observed or recorded can introduce an element of artificiality into our relationships, potentially leading to more guarded and less authentic social interactions.

Breaking Free: Reclaiming Our Digital Lives

Escaping the algorithmic cage requires a multi-pronged approach that combines individual resistance with systemic reform, so that our digital landscape comes to respect privacy and promote genuine human connection.

On an individual level, embracing privacy-enhancing tools is essential. As Edward Snowden has advocated, using encryption, VPNs, and privacy-focused browsers can substantially reduce exposure to unwarranted surveillance and data collection.

Cultivating critical thinking skills is equally important for navigating the modern digital landscape. In "The Internet Con: How to Seize the Means of Computation" (2023), Cory Doctorow explores "enshittification": the process by which tech platforms deliberately degrade user experiences to maximize profits. Doctorow argues that Big Tech companies manipulate online interactions and content curation, which is why users need to critically examine their digital experiences and seek diverse perspectives beyond algorithmically curated platforms.

Practicing digital minimalism can also play a role in reclaiming control over one's digital environment. This involves consciously limiting time spent on social media, unsubscribing from unnecessary emails, and taking a more intentional approach to digital engagement.

At a systemic level, we must demand algorithmic transparency. The Ada Lovelace Institute emphasizes that meaningful transparency goes beyond simple disclosure: it requires answers to key questions about data sources, system logic, and affected groups. Such transparency can help hold tech companies accountable for how their algorithms influence user behavior.

Holding platforms accountable for the spread of misinformation and harmful content is also vital. This involves advocating for legal reforms that place responsibility on platforms for the content they host and promote through their algorithms.

As we confront the realities of our surveilled digital landscape, it becomes clear that protecting our privacy, agency, and capacity for authentic interaction requires more than passive concern. It demands active engagement, technological literacy, and a commitment to creating spaces where genuine human connection can flourish. The challenge is substantial, but I believe we can work toward a digital future that respects individual autonomy and fosters meaningful human relationships.

1. Nouwens, M., Liccardi, I., Veale, M., Karger, D., & Kagal, L. (2020, April 25-30). Dark patterns after the GDPR: Scraping consent pop-ups and demonstrating their influence [Paper presentation]. CHI '20: CHI Conference on Human Factors in Computing Systems, Honolulu, HI, United States. https://doi.org/10.1145/3313831.3376321

2. Baines, S., Gannon, B., & Zeng, X. (2024). Perspectives on citizen data privacy in a smart city. Environment and Planning C: Politics and Space. https://doi.org/10.1177/13548565241247413
