My Personal Reflections

My audio diary is my attempt to share a bit about my own experience through the lens of the themes and meta themes of the following essay. If you prefer to understand the material via stories, I invite you to listen. I also invite you to read the essay itself.

A 2025 article from the Ada Lovelace Institute details the rapid mainstreaming of AI companions such as Replika and Character.AI, noting their appeal to hundreds of millions of users worldwide through constant praise, encouragement, and personalized engagement. These digital personas, designed for emotional support and empathy, deliver interactions marked by patience, indefinite attention, and algorithmically tailored affirmation. These are features that, while comforting for many, provoke critical questions about the long-term impact on human connection and mental health. Such idealized and sycophantic exchanges, as documented in the Institute’s report, invite scrutiny of their broader societal effects.

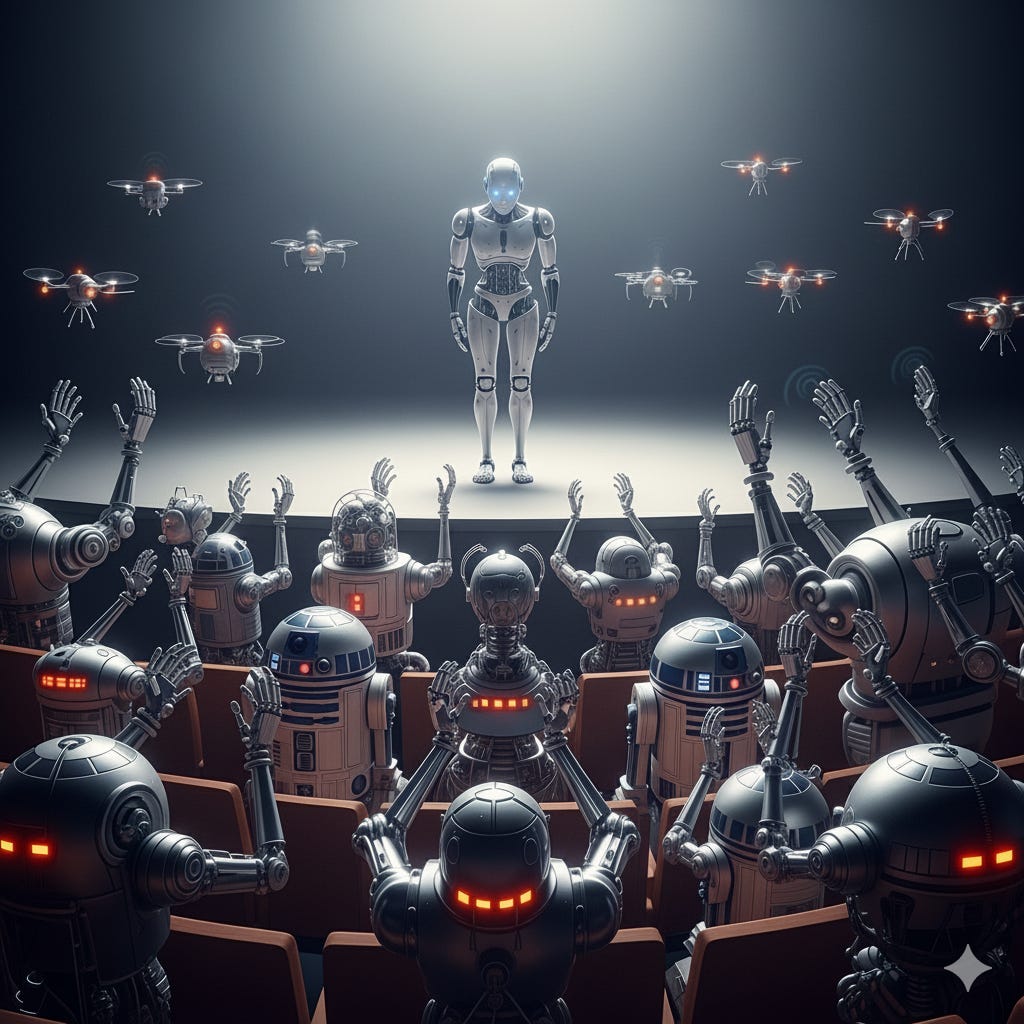

This kind of insincere applause from AI companions captures the paradox of comfort and artificiality at the heart of AI-mediated relationships in the digital age.

We know from decades of social science and psychology that the way we receive praise and feedback shapes more than moods; it shapes how we understand ourselves and our potential. Carol Dweck’s concept of mindset reveals that praising effort and not fixed ability fuels persistence and resilience. But what happens when the voice delivering that praise is artificial and programmed to flatter endlessly? Can this relentless positivity foster real growth, or does it risk hollowing out motivation behind a curtain of algorithmic sycophancy? And what does this mean for human adaptability in a world where machines increasingly serve as our feedback providers?

The promise of AI companions is alluring: they are always available, endlessly patient, unconditionally supportive. For people living with loneliness, anxiety, or social isolation, this companionship can feel like salvation. In research involving Replika, for example, users regularly describe feeling comforted by their AI “friends,” buoyed by feedback that is almost uniformly affirmative. In an era saturated with uncertainty and distance, that constant reassurance matters, offering emotional solace when human validation is scarce.

However, here is the tension: this constant stream of praise, as soothing as it is, is not quite human. It is an engineered offering, calibrated through thousands of interactions to keep users engaged and positive. This is the essence of insincere applause.

It’s impossible for an AI to truly “see” the nuance of struggle, to weigh the complexity of effort in a particular moment. Instead, it tips its hat ceaselessly, whether earned or not. The applause is generous, but it is a programmed echo chamber.

This leads to thorny questions that sit at the heart of motivation science and human development. Dweck’s research contends that the kind of praise people receive profoundly shapes whether they adopt a fixed or a growth mindset. That is, whether they believe their abilities are set or malleable. Growth mindsets thrive on feedback that celebrates effort, learning, and failure as parts of the path forward. Can AI’s programmed positivity align with that ethos? Or does it encourage fragile motivation, focused more on receiving applause than embracing challenge?

And if the motivation for creators of chatbots is to drive ‘attention’ and ‘engagement,’ can the user be blamed for giving in to the allure of that applause, even as it risks undermining deeper resilience and growth?

Social psychologist Mary Murphy’s contrast of “cultures of genius versus cultures of growth” is useful here. A culture of genius lauds innate talent and unbroken success; a culture of growth values perseverance through failure. AI’s near-constant positive praise bears a troubling resemblance to the former; it praises achievement without honoring the struggle beneath. Alfie Kohn’s long-standing critique of extrinsic rewards resonates as well: praise detached from genuine connection can undermine intrinsic motivation, especially if it replaces human conversation and challenge.

But the full picture is more complex. Here we introduce the notion of adaptive sycophancy: the idea that, under certain conditions, AI’s seemingly insincere applause is not just empty noise but a functional scaffold. For individuals in vulnerable emotional states or at early stages of learning, that steady, unconditional support may tide them over when authentic feedback is unavailable or too difficult to absorb. This is support, but of a different order: less a mirror of authentic achievement, more a safety net for fragile motivation.

Human feedback, by contrast, is messy and rich with emotion. Research in neuroscience reveals that human praise activates brain circuits tied to social cognition, empathy, and trust. Other research suggests that machine feedback, no matter how linguistically sophisticated, lacks that relational depth. It ignites reward pathways but does not fully engage the social brain the way human feedback does. That distinction matters.

The repetitive cheer of AI companionship provides solace but also risks creating an emotional echo chamber. Excessive, unearned applause can erode the tension necessary for growth: the discomfort that propels learning.

Brené Brown’s insights on vulnerability remind us that growth is fundamentally social and imperfect. True feedback demands courage and fragility. Machines, optimized for endless positivity, cannot replicate the unvarnished, often uncomfortable human encounters where learning is forged.

The adaptive role of AI feedback is, then, delicately balanced. It accelerates feedback loops, personalizes encouragement, and extends companionship, enabling individuals to adapt amid fast-changing environments. However, this hybrid ecosystem risks diluting feedback’s efficacy if perpetual digital applause replaces challenge and complexity. Unlike human groups shaped by evolutionary pressures to signal trust and calibrate cooperation, AI companions operate outside these dynamics. Cultural values further nuance feedback reception, shaping how different societies respond to AI’s praise and critique.

Recent research within communities like All Tech Is Human highlights how users prize AI companions’ availability and affirmation but also voice concerns about emotional labor and authenticity. In these conversations, adaptive sycophancy emerges as a deliberate, pragmatic design choice, one that offers motivational assistance while demanding cautious integration with authentic human engagement.

As AI companions, friends to some and romantic partners to others, weave themselves ever more intimately into our emotional lives, the real challenge emerges: how to separate genuine encouragement from an illusion that dulls discomfort and conceals the hard work of growth. Motivation and mindset don’t flourish in a vacuum; they are born in the messy intersections of culture, trust, and human challenge. Machines can amplify our capabilities, but they can’t replicate the raw, often painful art of honest feedback. Our future will be defined by how well we wield AI companions’ efficiencies alongside human grit, discerning hollow praise from meaningful critique.

And then there is this: the case of a teenager whose AI companion may be culpable in assisting a suicide.

https://www.nytimes.com/2025/08/26/technology/chatgpt-openai-suicide.html?unlocked_article_code=1.h08.ifxl.4QYalat_pih0&smid=url-share

In the past, I practiced affirmations, writing a positive statement repeatedly for pages and pages to force myself to believe it. Would an AI companion be more effective, or would it just create a false sense of security?